Our expressions under the algorithmic microscope

Mohamed Daoudi, a researcher at IMT Lille Douai, studies the automatic recognition of facial expressions in videos. His work combines geometric analysis of the face with machine learning algorithms, and it may pave the way for applications in medicine.

Anger, sadness, happiness, surprise, fear, disgust. These six emotions are expressed in humans through universal facial expressions, regardless of culture, as Paul Ekman’s work, published in the 1960s and 1970s, showed. Fifty years on, scientists are building on these results to automate the recognition of facial expressions in videos with shape-analysis algorithms. This is what Mohamed Daoudi, a researcher at IMT Lille Douai, is doing with computer vision.

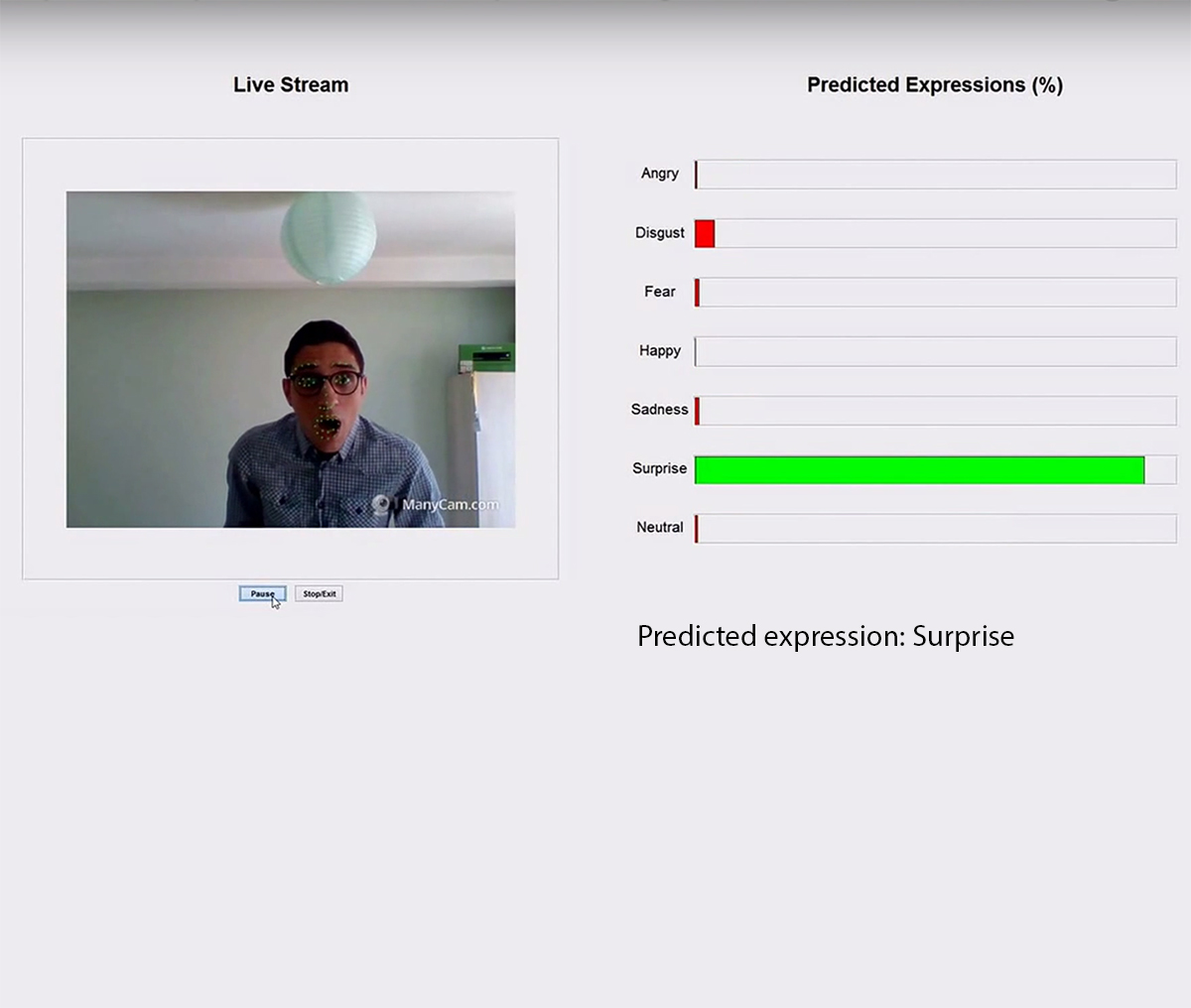

“We are developing digital tools which allow us to place characteristic points on the image of a face: in the corners of the lips, around the eyes, the nose, etc.,” Mohamed Daoudi explains. This operation is carried out automatically for each frame of a video. Once this step is complete, the researcher has a dynamic model of the face in the form of points whose positions change over time. The movements of these points, as well as their relative positions, provide clues about facial expressions. Because each expression has its own characteristic pattern, the way these points move over time can be matched to a specific expression.
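The landmark-tracking step described above can be illustrated with a minimal Python sketch. It assumes that some landmark detector (not shown, and not the team’s own tool) has already produced, for each frame, N (x, y) points, stacked into a NumPy array of shape (T, N, 2). The hypothetical function `landmark_dynamics` removes head translation and overall face size from each frame, then measures how far every point has moved relative to the first frame. This is only one simple way to encode point dynamics, not the geometric representation used in the research.

```python
import numpy as np

def normalize_frame(points: np.ndarray) -> np.ndarray:
    """Remove translation and scale from one frame of (N, 2) landmark coordinates."""
    centered = points - points.mean(axis=0)  # move the centroid to the origin
    scale = np.linalg.norm(centered)         # Frobenius norm as an overall face size
    return centered / scale if scale > 0 else centered

def landmark_dynamics(video: np.ndarray) -> np.ndarray:
    """Turn a (T, N, 2) landmark sequence into displacements from the first frame.

    Returns an array of shape (T, N, 2): how far each point has moved, in
    normalized units, relative to its position in frame 0.
    """
    normalized = np.stack([normalize_frame(frame) for frame in video])
    return normalized - normalized[0]
```

Normalizing each frame first means that a face moving across the image, or coming closer to the camera, does not register as a change of expression; only the relative movements of the points remain.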

The models built from these facial points are then processed by machine learning tools. “We train our algorithms on databases that allow them to learn the dynamics of the characteristic points for happiness or fear,” Mohamed Daoudi explains. By comparing the measurements extracted from a new video with what it has learned from these databases, the algorithm can classify the expression into one of the six categories.
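To make the classification step concrete, here is a minimal sketch of a 1-nearest-neighbor classifier, a deliberately simple stand-in for the machine learning tools mentioned above. It assumes each video has already been reduced to a trajectory array of shape (T, D), for example the flattened landmark displacements of each frame. Because videos differ in length, the hypothetical `resample` helper first stretches every trajectory to a fixed number of frames; the classifier then returns the label of the closest training example. The class name, the default length of 20 frames and the use of Euclidean distance are illustrative assumptions.

```python
import numpy as np

EXPRESSIONS = ("anger", "sadness", "happiness", "surprise", "fear", "disgust")

def resample(traj: np.ndarray, length: int = 20) -> np.ndarray:
    """Linearly resample a (T, D) trajectory to a fixed number of frames."""
    t_old = np.linspace(0.0, 1.0, len(traj))
    t_new = np.linspace(0.0, 1.0, length)
    # interpolate each coordinate independently over normalized time
    return np.stack([np.interp(t_new, t_old, traj[:, d])
                     for d in range(traj.shape[1])], axis=1)

class NearestNeighborExpressionClassifier:
    """Hypothetical 1-NN classifier over fixed-length landmark trajectories."""

    def __init__(self, length: int = 20):
        self.length = length
        self.features = []
        self.labels = []

    def fit(self, trajectories, labels):
        for traj, label in zip(trajectories, labels):
            if label not in EXPRESSIONS:
                raise ValueError(f"unknown expression: {label}")
            vector = resample(np.asarray(traj, dtype=float), self.length).ravel()
            self.features.append(vector)
            self.labels.append(label)
        return self

    def predict(self, traj) -> str:
        query = resample(np.asarray(traj, dtype=float), self.length).ravel()
        distances = [np.linalg.norm(query - f) for f in self.features]
        return self.labels[int(np.argmin(distances))]  # label of the closest example
```

In practice a training database would contain many labeled sequences per expression, and more robust learners, or distances that tolerate differences in speed, would replace the plain Euclidean comparison.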

This type of work is of interest to several industrial sectors, for instance for observing customer satisfaction when purchasing a product. The FUI Magnum project has taken an interest in this work. By observing customers’ faces, we could detect whether or not their experience was an enjoyable one. In this case, the aim is not necessarily to recognize a precise expression but rather to describe the person’s state as positive or negative, and to what extent. “Sometimes this is largely sufficient; in this type of situation, we do not need to determine whether the person is sad or happy,” Mohamed Daoudi points out.
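The positive-or-negative description used in the customer-satisfaction scenario can be sketched as a weighted average over a classifier’s output probabilities. The weights below are a hypothetical choice, not taken from the FUI Magnum project: happiness counts as positive, surprise as neutral, and the four remaining expressions as negative. The resulting score lies between -1 and 1, so its sign gives the direction and its magnitude gives the extent.

```python
# Hypothetical valence weights, chosen for illustration only.
VALENCE = {
    "happiness": 1.0,
    "surprise": 0.0,  # ambiguous, treated as neutral here
    "anger": -1.0,
    "sadness": -1.0,
    "fear": -1.0,
    "disgust": -1.0,
}

def valence_score(probabilities: dict[str, float]) -> float:
    """Weighted average of non-negative expression probabilities, in [-1, 1]."""
    total = sum(probabilities.values())
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    return sum(VALENCE[name] * p for name, p in probabilities.items()) / total

def describe(score: float, neutral_band: float = 0.2) -> str:
    """Turn a valence score into a coarse positive/negative description."""
    if abs(score) < neutral_band:
        return "neutral"
    direction = "positive" if score > 0 else "negative"
    return f"{direction} ({abs(score):.0%})"
```

For example, a face judged 80% happy and 20% sad gives a score of about 0.6, described as mildly to clearly positive without committing to a single expression.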

The IMT Lille Douai researcher also highlights the advantages of such a technology in the medical field: “in psychiatry, practitioners look at expressions to get an indication of the psychological state of a patient, particularly for depression.” By using a camera and a computer or smartphone to help analyze these facial expressions, psychiatrists can evaluate the effects of the medication administered to a patient more objectively. A rigorous study of the changes in the face may also help detect pain in patients who have difficulty expressing it. This is the goal of work by PhD student Taleb Alashkar, whose thesis is funded by IMT’s Futur & Ruptures (future and disruptive innovation) program and supervised by Mohamed Daoudi and Boulbaba Ben Amor. “We have created an algorithm that can detect pain using 3D facial sequences,” explains Mohamed Daoudi.

The researcher is careful not to present his work as emotion analysis. “We are working on the recognition of facial expressions. Emotions are a step beyond this,” he states. Although an expression associated with joy can be detected, we cannot conclude that the person is happy. For that, the algorithms would need to be able to say with certainty that the expression is not faked. Mohamed Daoudi explains that this remains a work in progress. The ultimate goal is nonetheless to introduce emotion into our machines as they become increasingly intelligent.

[box type=”info” align=”” class=”” width=””]

From 3D to 2D

To improve facial expression recognition in 2D videos, researchers are incorporating algorithms developed in 3D for detecting shape and movement. To study faces in 2D videos more easily, Mohamed Daoudi is therefore capitalizing on the results of the ANR project Face Analyser, conducted with Centrale Lyon and academic partners in China. Some changes are so small that they are difficult to classify, which calls for digital tools capable of amplifying them. With colleagues at Beihang University, Mohamed Daoudi’s team has managed to amplify the subtle geometrical deformations of the face in order to classify them better.[/box]
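One simple way to picture the amplification of subtle deformations is linear magnification of landmark motion: each point’s displacement from a reference (for instance neutral) frame is multiplied by a factor alpha greater than 1. The sketch below is a hypothetical illustration of that idea, not the method developed with Beihang University. It assumes landmark sequences stored as NumPy arrays of shape (T, N, 2) and applies an optional moving average over time first, since magnifying raw coordinates would magnify tracking noise as well.

```python
import numpy as np

def smooth(sequence: np.ndarray, window: int = 3) -> np.ndarray:
    """Centered moving average over time, padding the ends by repeating edge frames."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    pad = window // 2
    padded = np.pad(sequence, ((pad, pad), (0, 0), (0, 0)), mode="edge")
    length = len(sequence)
    # average the `window` shifted copies of the sequence surrounding each frame
    return np.mean([padded[i:i + length] for i in range(window)], axis=0)

def amplify_deformations(sequence: np.ndarray, alpha: float = 3.0,
                         window: int = 3, reference: int = 0) -> np.ndarray:
    """Exaggerate landmark motion relative to a reference frame by a factor alpha."""
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    smoothed = smooth(np.asarray(sequence, dtype=float), window)
    neutral = smoothed[reference]
    # neutral + alpha * displacement; alpha = 1 leaves the smoothed motion unchanged
    return neutral + alpha * (smoothed - neutral)
```

With `alpha=3.0` and no smoothing (`window=1`), a mouth corner that moves 0.1 units away from its neutral position is rendered as moving 0.3 units, which can make small differences between expressions easier for a classifier to separate.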
