Computerizing scripts: losing character?

With the Internet and new technologies, we now rely primarily on writing to communicate. This change has led to a renewed interest in graphemics, the linguistic study of writing. Yannis Haralambous, a researcher in natural language processing and text mining at IMT Atlantique, helped organize the /gʁafematik/ conference held in June 2018. In this article, we look at the research currently underway in graphemics and at questions about computerized scripts and the Unicode encoding, which will decide the future of languages and their characters…

Graphemics. Never heard the word before? That’s not surprising. It refers to a branch of linguistics similar to phonology, except that it studies a language’s writing system. This area was long neglected because linguistics tended to focus on the spoken rather than the written word. The arrival of new technologies, which rely heavily on written text, has changed all that and explains the renewed interest in the study of writing. “Graphemics is of interest to linguists just as much as it is to computer engineers, psychologists, researchers in communication sciences and typographers,” explains Yannis Haralambous, a researcher in natural language processing and text mining at IMT Atlantique. “I wanted to bring all these different fields together by organizing the /gʁafematik/ conference on June 14 and 15, 2018, on graphemics in the 21st century.”

Among those in attendance were typographers who came to talk about the readability of typographic characters on screens. Drawing on psycholinguistic field studies, they design fonts with specific characters for children or for people with reading difficulties. Several researchers also presented work on the use of emojis, showing how these small pictographs, no larger than letters, can enrich a text with emotional weight or replace words and even entire sentences, changing the way we write on social networks. Other work suggested that the way we type on keyboards, including our typing speed, could serve as a signature for biometric identification, with possible applications in cybersecurity or in the diagnosis of disorders such as dyslexia.
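To give a sense of what a typing “signature” is made of, here is a minimal sketch of keystroke-timing features. It assumes each key event is recorded as a key plus press and release times in milliseconds; the `KeyEvent` class, the helper functions and the sample data are all hypothetical illustrations, not taken from the work presented at the conference. Dwell time is how long a key is held down; flight time is the gap between releasing one key and pressing the next.

```python
from dataclasses import dataclass


@dataclass
class KeyEvent:
    """Hypothetical record of one keystroke (times in milliseconds)."""
    key: str
    press_ms: float
    release_ms: float


def dwell_times(events):
    """How long each key was held down."""
    return [e.release_ms - e.press_ms for e in events]


def flight_times(events):
    """Gap between releasing one key and pressing the next.

    Can be negative when a typist presses the next key before releasing the previous one.
    """
    return [b.press_ms - a.release_ms for a, b in zip(events, events[1:])]


def profile(events):
    """A crude timing profile: (mean dwell, mean flight). Assumes at least one event."""
    d = dwell_times(events)
    f = flight_times(events)
    return (sum(d) / len(d), sum(f) / len(f) if f else 0.0)


# Made-up timings for someone typing "the".
sample = [KeyEvent("t", 0, 90), KeyEvent("h", 150, 230), KeyEvent("e", 300, 380)]
print(profile(sample))
```

A real identification system would compare many such features statistically against a profile recorded earlier; the two averages here only illustrate the kind of raw measurement involved.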

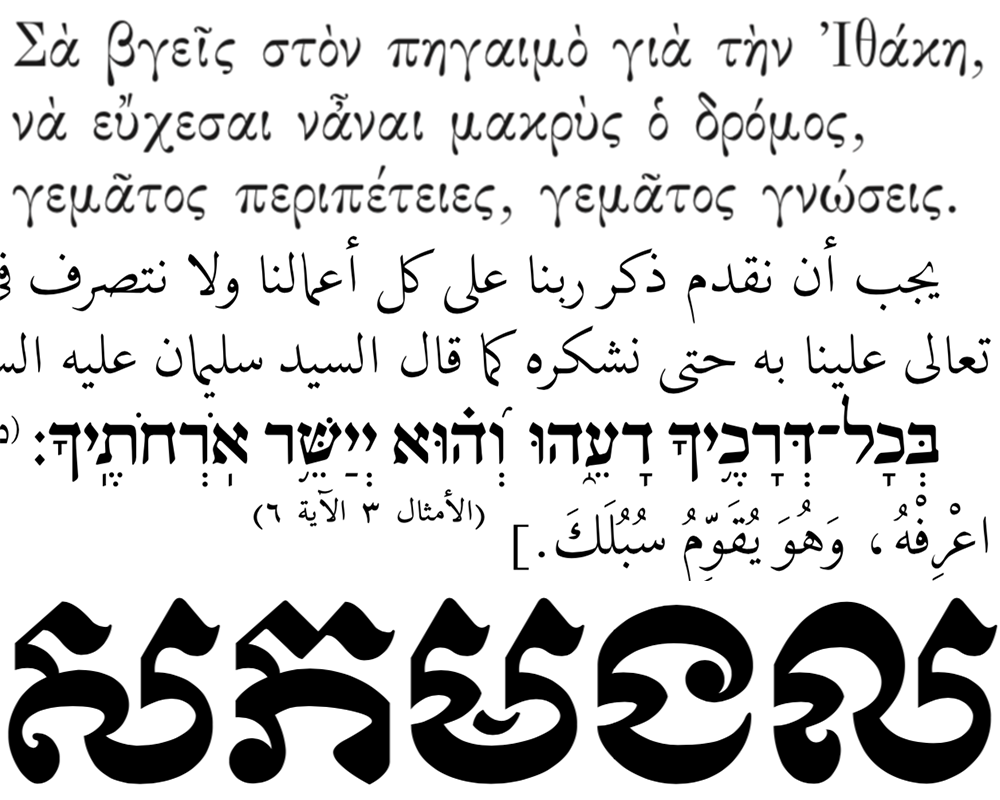

Yet among all the research presented at the conference, one leading actor in written language stole the show: Unicode. Anyone who types on a computer, whether they know it or not, constantly deals with this universal character encoding standard. But its goal of encoding all the world’s languages has consequences for the development of writing systems, particularly for “sparse” (low-resource) modern languages and for dead languages.

Questioning the encoding system’s dominance

Press a key on your keyboard, for example the letter “e”. The keyboard sends your machine a number, a numeric code identifying the physical key. The keyboard driver receives it and, according to the active keyboard layout, immediately converts it into a Unicode character, a standardized number called a code point (U+0065 for “e”). This is the information that is then stored or transmitted. At the same time, the system uses a font to display an image of the letter “e” (a glyph) in your text processing area. “If you change the virtual keyboard in your machine’s system preferences to Russian or Chinese, the same key on the same physical keyboard will produce different characters from other writing systems offered by Unicode,” explains Yannis Haralambous.
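The key-to-character step described above can be pictured as a lookup from a physical key to a code point, where the result depends on the active layout. The sketch below is a toy model, not how any operating system actually implements it: the key identifiers “KeyE” and “KeyT” borrow the naming of W3C UI Events `code` values, and the `LAYOUTS` table is a tiny hypothetical excerpt. On a Russian ЙЦУКЕН layout, the key that types “e” on a US QWERTY keyboard produces the Cyrillic letter “у”.

```python
import unicodedata

# Hypothetical excerpts of two keyboard layouts: physical key -> character.
LAYOUTS = {
    "en-US": {"KeyE": "e", "KeyT": "t"},
    "ru": {"KeyE": "\u0443", "KeyT": "\u0435"},  # Cyrillic "у" and "е"
}


def key_to_char(physical_key: str, layout: str) -> str:
    """Translate a physical key into a character according to the active layout."""
    return LAYOUTS[layout][physical_key]


def describe(ch: str) -> str:
    """Show the code point and official Unicode name of a character."""
    return f"U+{ord(ch):04X} {unicodedata.name(ch)}"


for layout in ("en-US", "ru"):
    ch = key_to_char("KeyE", layout)
    print(layout, ch, describe(ch))
```

The Russian layout also shows why Unicode stores numbers rather than shapes: the Cyrillic “е” produced by KeyT looks identical to the Latin “e”, yet it is a distinct character, U+0435.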

Unicode is developed by a consortium of IT industry players such as Microsoft, Apple and IBM. Today it is the only encoding system adopted worldwide. “Because it is the only system of its kind, it bears all the responsibility for how scripts develop,” the researcher explains. By accepting some characters into its repertoire while rejecting others, Unicode controls the computerization of scripts. While Unicode’s dominance poses no real threat to “closed” writing systems such as that of French, in which the number of characters does not vary, “open” writing systems are likely to be affected. “Every year, new characters are invented in the Chinese language, for example, and the Unicode consortium must follow these changes,” the researcher explains. But the most fragile scripts are those of dead and ancient languages. Once encoding proposals submitted by enthusiasts or specialists have been accepted by the Unicode consortium, they become final: the encoded characters can no longer be modified or deleted. Yannis Haralambous finds this incomprehensible: “Errors are building up and will forever remain unchanged!”
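A small, well-documented case shows how permanent an accepted entry is. Unicode’s stability policy also freezes character names, so when U+FE18 was published with the name “…LENTICULAR BRAKCET” instead of “BRACKET”, the typo stayed; the correct spelling was only added later as a formal name alias. This example comes from elsewhere in the standard and is our illustration, not one cited by the researcher. Python’s standard `unicodedata` module exposes both names:

```python
import unicodedata

# U+FE18: a vertical presentation form of a bracket used in East Asian typography.
ch = "\uFE18"

# The official name, frozen by the stability policy, typo included.
official = unicodedata.name(ch)
print(official)

# The corrected spelling exists only as a formal name alias.
# unicodedata.lookup() accepts aliases (Python 3.3+) and resolves
# it to the very same character.
corrected = "PRESENTATION FORM FOR VERTICAL RIGHT WHITE LENTICULAR BRACKET"
print(unicodedata.lookup(corrected) == ch)
```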

Languages with thousands of different characters, such as Japanese or Chinese, do not naturally lend themselves to input on a physical keyboard, but phonetic input methods, which suggest the matching characters, help bypass this initial difficulty. Some Indian scripts and the Arabic script, however, are clearly affected by the very principle of splitting writing into characters: “The same glyphs can be combined in different ways by forming ligatures that change the meaning of the text,” Yannis Haralambous explains. Unicode does not share this view: because these ligatures do not fit its encoding model, it treats them as purely aesthetic and therefore non-essential… The result is a loss of meaning for some scripts. But Unicode also plays an important role in a completely different area.
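Two small illustrations show how Unicode splits such scripts into characters, using Python’s standard `unicodedata` module; the examples and the helper `code_points` are ours, not the researcher’s. In Malayalam, a conjunct such as “kka” is not encoded as one unit but as three code points: KA, the VIRAMA sign, and KA again; whether it is displayed as a stacked ligature is left to the font. In Arabic, the lam-alef ligature does have a code point, U+FEFB, but only as a compatibility “presentation form”: NFKC normalization replaces it with the plain letters lam and alef, reflecting Unicode’s view of the ligature as a matter of display.

```python
import unicodedata


def code_points(text: str) -> list[str]:
    """List each code point in a string with its official Unicode name."""
    return [f"U+{ord(c):04X} {unicodedata.name(c)}" for c in text]


# Malayalam conjunct "kka": KA + VIRAMA + KA, three separate code points.
kka = "\u0D15\u0D4D\u0D15"
for line in code_points(kka):
    print(line)

# Arabic lam-alef ligature, encoded only as a compatibility presentation form.
lam_alef = "\uFEFB"
print(code_points(lam_alef))

# NFKC normalization decomposes it back into two ordinary letters.
print(code_points(unicodedata.normalize("NFKC", lam_alef)))
```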

A move towards the standardization of scripts?

While we learn spoken language spontaneously, written language is taught. “In France, the national education system has a certain degree of power and contributes to institutionalizing the written language, as do dictionaries and the Académie Française,” says Yannis Haralambous. Alongside language policies, Unicode strongly affects how the written language develops. “Unicode does not seek to modify or to regulate, but rather to implement. However, because of its constraints and technical choices, the system has the same degree of power as the institutions.” In the researcher’s view, three elements together offer a complete picture of writing systems and their development: the linguistic study of the script, its institutionalization, and Unicode.

The case of the Malayalam script, used in the Indian state of Kerala, clearly shows the important role that character encoding and software play in how a population adopts a writing system. In the 1980s, the government implemented a reform that simplified Malayalam’s ligatures to make the script easier to print and computerize. Yet the population kept its handwritten script with complex ligatures. When Unicode emerged in 1990, the Mac and Windows operating systems integrated only the simplified official version. What changed things was the parallel development of open source software, which made the script with traditional ligatures available on computers. “Today, after a period of uncertainty at the beginning of the 2000s, the traditional writing has made a strong comeback,” the researcher observes, emphasizing how unusual this case is: “Writing reforms imposed by a government are usually definitive, unless a drastic political change occurs.”

Traditional Malayalam script. Credit: Navaneeth Krishnan S

While open source software and traditional writing won out in the case of Malayalam, is the computerization of languages not still leading us toward standardized writing systems? “Some experts claim that in a century or two, we will speak a mixture of different languages with a high proportion of English, but also Chinese and Arabic. And the same for writing systems, with Latin script finally taking over… But in reality, we just don’t know,” Yannis Haralambous explains. The researcher also points out that switching to the Latin script has been proposed in Japan and China for centuries without success. “The mental patterns of Chinese and Japanese speakers are built on the characters: in losing the structure of characters, they would lose the construction of meaning…” While language does contribute to how we perceive the world, the role the writing system plays in this process has yet to be determined, and it remains a complex research problem. As Yannis Haralambous explains, “it is difficult to separate spoken language from written language to understand which influences our way of thinking the most…”
